The EU AI Act: Essential Next Steps for Fintech and Retail Trading Professionals

The EU AI Act is in force and key enforcement deadlines have passed, making AI governance and compliance requirements legally binding for fintech firms, retail brokers, and their technology providers.

The EU Artificial Intelligence Act is now in force, with key obligations already applying to fintech firms and retail trading professionals. Effective since August 2024 and entering an active enforcement phase in August 2025, the Act introduces binding requirements on general-purpose AI, governance, and penalties. As a result, both retail brokers and their technology providers must already ensure compliance with the AI Act’s core provisions.

Regulation Overview

The EU Artificial Intelligence Act (AI Act) is in effect, and the first enforcement deadlines have already passed. The Act entered into force on 1 August 2024 and applies in stages. Bans on prohibited AI practices and AI literacy duties took effect on 2 February 2025, and a second wave of obligations followed 12 months after entry into force.

As of 2 August 2025, core provisions around general-purpose AI (GPAI), governance, and penalties are live. This means that both retail brokers and technology vendors serving them are already subject to active legal requirements.

For retail brokers, CFD/FX platforms, and technology providers in this market, this impacts:

- Client onboarding automation (AI-powered KYC/AML tools)

- Trade execution & risk models (AI-assisted order routing, risk profiling)

- Fraud detection & surveillance (AI monitoring of transactions and suspicious trading patterns)

- Customer engagement tools (chatbots, automated market content, personalized client offers)

The transition from “prepare for the AI Act” to “you are now bound by it” has happened. If you haven’t aligned your systems and vendor relationships to the new rules, you are already behind.

Who Does the AI Act Apply To?

The AI Act covers both:

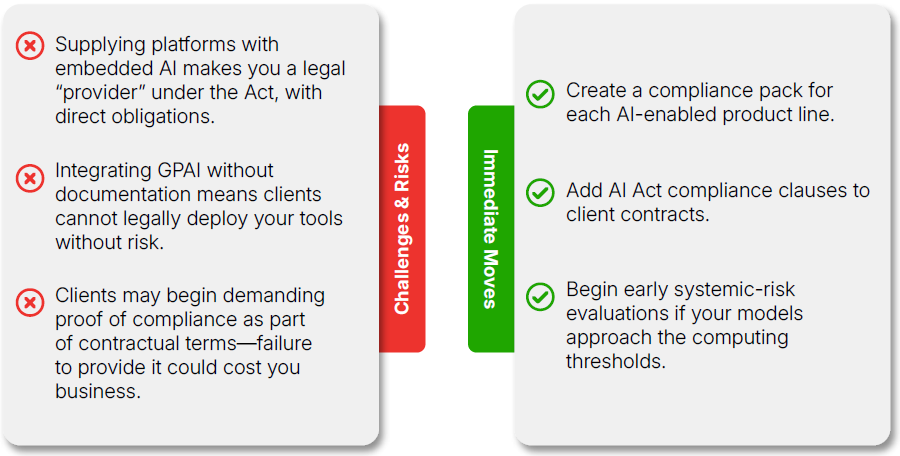

- Providers – Those developing, integrating, or placing an AI system or GPAI model on the EU market (including tech vendors selling white-label platforms).

- Deployers – Businesses using AI in a professional capacity (brokers using vendor-supplied AI tools).

In the Fintech/Trading sector, that means:

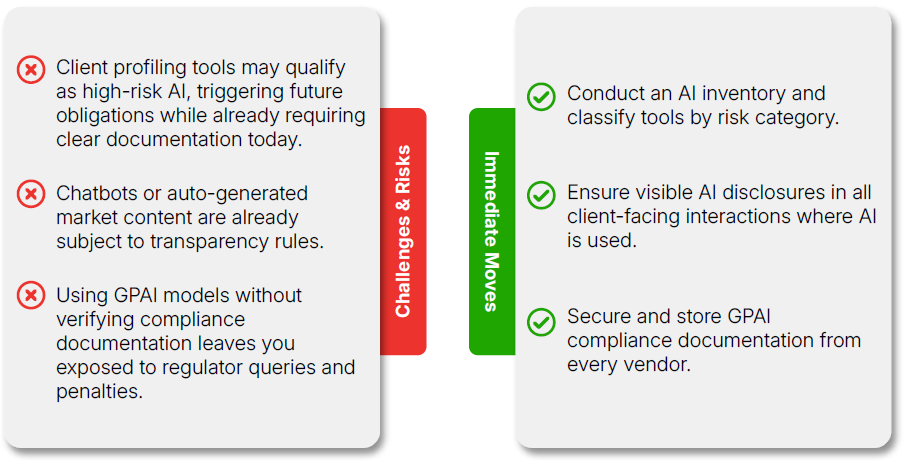

- Retail Brokers – Using AI for client risk profiling, personalized trading insights, onboarding verification.

- Fintechs & Payment Platforms – Applying AI to credit scoring, fraud detection, or compliance checks.

- Technology Vendors – Embedding AI into platforms, analytics tools, or automated decision-making systems.

- Third-Party Data Providers – Offering AI-powered analytics, sentiment models, or fraud detection feeds.

What Has Changed?

1. GPAI Obligations Now in Force (from 2 August 2025)

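Providers of GPAI models must now:

- Maintain technical documentation on training, testing, and evaluation.

- Publish a summary of the content used for training, using the AI Office template.

- Put in place a policy to comply with EU copyright law, including text-and-data-mining opt-outs.

- Provide information and documentation to downstream providers that integrate the model into their own AI systems.

Firms that integrate a third-party large language model into their platform are usually downstream providers rather than GPAI model providers. However, substantially modifying the model (for example, through extensive fine-tuning) can make them GPAI providers in their own right. GPAI models already on the market before 2 August 2025 have until 2 August 2027 to comply.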

Impact:

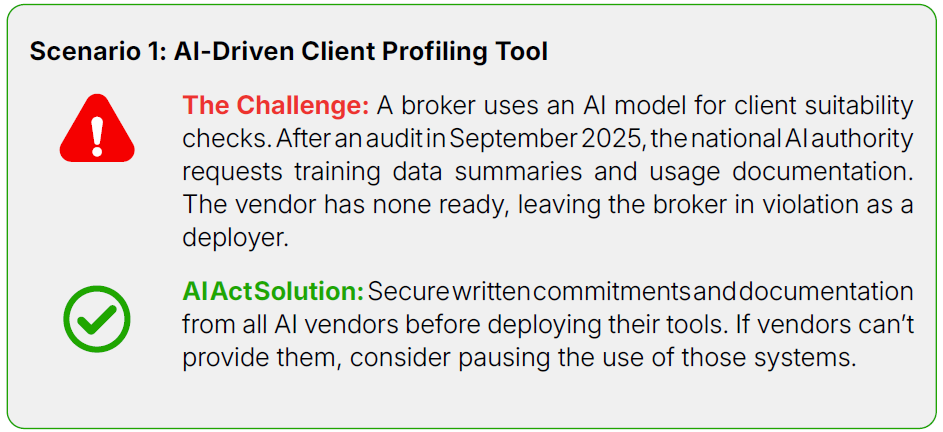

For brokers, this means requesting compliance documentation from technology vendors and storing it for audits. For vendors that act as GPAI model providers, these materials must now be ready before selling or updating AI-enabled products. Documentation gaps can expose both parties to regulatory action and reputational risk.
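For brokers, an audit-ready record of what each vendor has supplied makes documentation gaps visible early. The following is a minimal sketch, assuming one record per vendor. The document keys are hypothetical names mirroring the four GPAI documentation items listed earlier in this section. They do not represent an official schema.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List

# Hypothetical keys for the four GPAI documentation items a broker might request
REQUIRED_GPAI_DOCS = (
    "technical_documentation",
    "training_data_summary",
    "copyright_policy",
    "usage_instructions",
)


@dataclass
class VendorDocFile:
    """Audit record of which GPAI documents a vendor has supplied, and when."""
    vendor: str
    received: Dict[str, date] = field(default_factory=dict)

    def record(self, doc: str, on: date) -> None:
        if doc not in REQUIRED_GPAI_DOCS:
            raise ValueError(f"unknown document type: {doc}")
        self.received[doc] = on  # a newer version overwrites the older date

    def missing(self) -> List[str]:
        # Preserves the order of REQUIRED_GPAI_DOCS
        return [doc for doc in REQUIRED_GPAI_DOCS if doc not in self.received]

    def is_complete(self) -> bool:
        return not self.missing()
```

Storing the date received, rather than a simple yes/no flag, lets the file show an auditor when each document arrived relative to a product launch or model update.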

2. Governance Framework is Active

- National competent authorities are taking up their supervisory roles across Member States.

- The AI Office within the European Commission is functioning as the central oversight body for GPAI.

Impact:

These bodies are not theoretical—they now have the power to investigate, request documentation, and even halt the use of AI systems. For financial services, where AI is often embedded deep in operations, this creates a real compliance and operational continuity risk if systems are suspended pending review.

3. Penalties Regime is Live

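- Fines of up to €35 million or 7% of total worldwide annual turnover, whichever is higher, for engaging in prohibited AI practices.

- Lower tiers apply to other breaches: up to €15 million or 3% for non-compliance with most other obligations, and up to €7.5 million or 1% for supplying incorrect, incomplete, or misleading information to authorities.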
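Fine ceilings under Article 99 combine a fixed amount with a share of total worldwide annual turnover. For most undertakings, the higher of the two applies. For SMEs and start-ups, the lower applies. The following is a minimal sketch of that arithmetic. The tier keys are hypothetical labels. The sketch covers only the Article 99 tiers, and fines on GPAI model providers are set separately in Article 101.

```python
from decimal import Decimal
from typing import Dict, Tuple

# Article 99 ceilings: hypothetical tier key -> (fixed amount in EUR, share of worldwide turnover)
FINE_TIERS: Dict[str, Tuple[Decimal, Decimal]] = {
    "prohibited_practice": (Decimal("35000000"), Decimal("0.07")),
    "other_obligation": (Decimal("15000000"), Decimal("0.03")),
    "misleading_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine(tier: str, worldwide_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Upper bound of a fine for an undertaking in the given tier.

    Large undertakings face the higher of the two ceilings; SMEs and start-ups the lower.
    """
    fixed_cap, turnover_share = FINE_TIERS[tier]
    turnover_cap = turnover_share * worldwide_turnover_eur
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)
```

For example, a broker with €1 billion in worldwide turnover faces a ceiling of €70 million for a prohibited practice, because 7% of turnover exceeds the €35 million fixed amount. For a firm with €100 million in turnover, the ceiling is €35 million. These figures are maximums. Authorities set actual fines case by case.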

Impact:

Early enforcement may focus on a small number of high-profile cases intended to set an example. A retail broker or fintech vendor found in violation could find itself in the headlines, facing not only financial penalties but also serious damage to client trust.

4. Member State Compliance Infrastructure Operational

- Member States were required to designate their competent AI authorities and lay down penalty rules by 2 August 2025.

Impact:

Once designated, your domestic regulator has both the mandate and the tools to enforce the AI Act. Complaints and whistleblowing reports about AI use can also be filed locally, and these may lead to inspections.

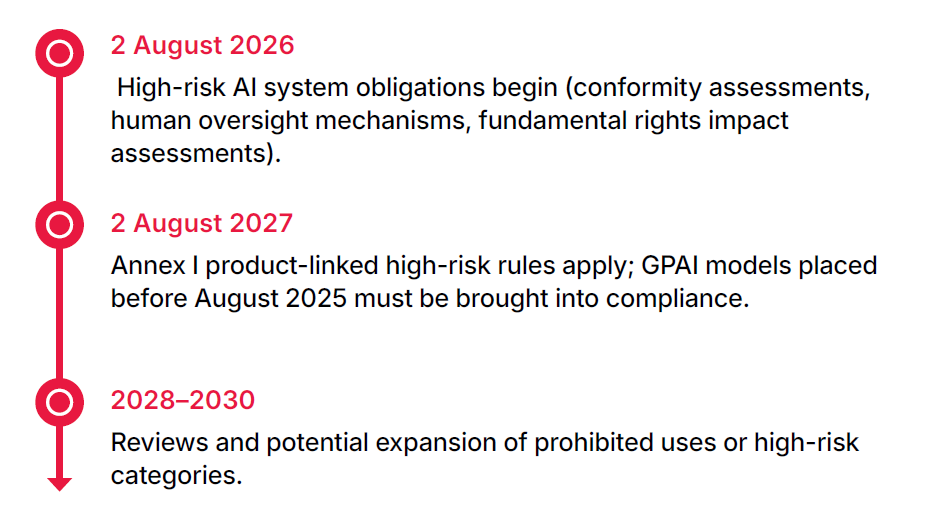

Timeline and Implementation

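Obligations for the high-risk AI systems listed in Annex III apply from 2 August 2026. These systems include credit scoring and creditworthiness assessment of individuals. If you wait until 2026 to prepare, you will be competing with many other firms for the same assessment, documentation, and advisory capacity. This could delay launches or even force the temporary suspension of certain AI services.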
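The staged application dates set out in Article 113 can be tracked with a small lookup table. This minimal sketch assumes Python 3.8 or later. The milestone labels are simplified paraphrases of our own rather than the Act's wording.

```python
from datetime import date
from typing import List, Optional, Tuple

# Simplified summary of the AI Act's staged application dates (Article 113).
# Labels are paraphrases; entries are kept in chronological order.
MILESTONES: List[Tuple[date, str]] = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibited AI practices and AI literacy duties apply"),
    (date(2025, 8, 2), "GPAI model obligations, governance and penalty rules apply"),
    (date(2026, 8, 2), "Most remaining provisions apply, including Annex III high-risk obligations"),
    (date(2027, 8, 2), "High-risk rules for AI in Annex I regulated products apply; "
                       "GPAI models placed on the market before 2 August 2025 must comply"),
]


def milestones_in_effect(as_of: date) -> List[str]:
    """Labels of every milestone whose start date is on or before `as_of`."""
    return [label for start, label in MILESTONES if start <= as_of]


def next_milestone(as_of: date) -> Optional[Tuple[date, str]]:
    """First milestone strictly after `as_of`, or None once all dates have passed."""
    # Relies on MILESTONES being sorted chronologically.
    return next(((start, label) for start, label in MILESTONES if start > as_of), None)
```

For example, a check as of 1 September 2025 lists the first three milestones and returns 2 August 2026 as the next one.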

Impact Analysis: Who’s Affected and How

Expert Opinion

“One major concern that came from the compliance teams is that they cannot understand a lot of the very complex documentation being distributed by the GPAI providers. GPAI providers often publish 50+ page PDFs written for a very technical audience, and the core risk is often about what isn't being disclosed.”

“It's essential that EU companies look at which models are compliant before selecting them for any sort of purpose, especially for any ‘high risk’ use cases as defined in the EU AI Act.” (Andrew Gamino-Cheong, CTO & Co-Founder at Trustible)

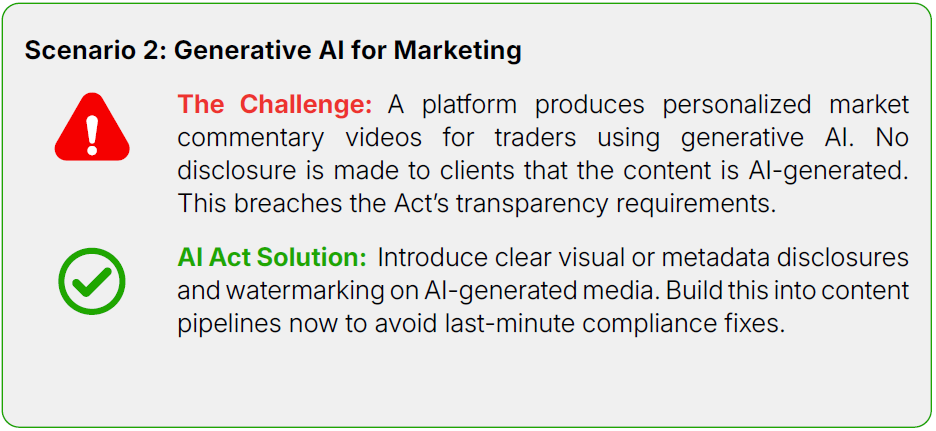

Real-World Scenarios

Key Takeaways

Integrated Risk Mapping:

A clear inventory of AI systems is no longer optional. Without it, you can’t know which obligations apply.
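An AI inventory can start as a simple structured record for each system. It can then be turned into a list of follow-up actions. This is a hypothetical sketch. The `Tier` labels are our own shorthand for the Act's broad risk categories. Assigning a system to a tier is a legal judgment that the code records but does not make. The 2 August 2026 date applies to Annex III high-risk systems.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"


class Tier(Enum):
    # Hypothetical internal shorthand for the Act's broad risk categories
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    TRANSPARENCY = "transparency"
    MINIMAL = "minimal"


@dataclass
class AISystem:
    name: str
    use_case: str
    role: Role
    tier: Tier  # assigned by the firm after legal review, not computed here
    vendor: Optional[str] = None


def action_items(inventory: List[AISystem]) -> List[str]:
    """Turn inventory entries into a to-do list of follow-up actions."""
    items: List[str] = []
    for system in inventory:
        if system.tier is Tier.PROHIBITED:
            items.append(f"{system.name}: stop using (prohibited practice)")
        elif system.tier is Tier.HIGH_RISK:
            items.append(
                f"{system.name}: prepare high-risk obligations as {system.role.value} by 2 August 2026"
            )
        elif system.tier is Tier.TRANSPARENCY:
            items.append(f"{system.name}: disclose AI use to clients")
        # Deployers rely on vendor documentation, so the supplier must be on record
        if system.role is Role.DEPLOYER and system.vendor is None:
            items.append(f"{system.name}: record the supplying vendor")
    return items
```

For example, an inventory might contain the following entries. The tier assignments are illustrative. There is a vendor-supplied credit scorer tagged high-risk, an in-house support chatbot tagged for transparency duties, and a trade surveillance tool with no vendor on record. This inventory yields three action items, one per system.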

Vendor Chain Accountability:

In a B2B environment, your compliance is only as strong as your weakest supplier.

Transparency as Trust:

Clear AI disclosures can reassure clients and regulators—and differentiate your brand.

Documentation Discipline:

From 2 August 2025 onwards, missing GPAI documentation is a direct liability risk.

Early Prep for 2026:

Building assessment and oversight processes now will help you avoid operational delays when high-risk AI rules apply from 2 August 2026.